AI-Generated Code: When to Trust It and When to Intervene

Generative AI has entered the software development world with remarkable speed and scale. Tools like GitHub Copilot, Amazon CodeWhisperer, OpenAI Codex, and GetGenerative.ai are reshaping how code is written.

What began as auto-suggestion tools has evolved into intelligent assistants capable of writing entire classes, tests, and integrations. According to the Stack Overflow Developer Survey, approximately 82% of developers reported actively using AI tools to write code.

The productivity gains are tangible—developers report building applications in weeks that once took months, with some citing 10× increases in output. However, this rapid transformation also presents a critical question for tech leaders and engineers alike:

When should we trust AI-generated code, and when must we intervene?

This post answers exactly that.

The Rise of AI in Software Development

AI-generated code is no longer experimental—it’s becoming standard. Enterprises and startups alike are integrating AI agents to handle everything from scaffolding and refactoring to test generation and documentation. As per multiple surveys:

- 82% of developers use AI tools daily or weekly.

- 15% say over 80% of their current codebase includes AI-generated code.

- Half of AI adopters are from small teams (<10 developers), while a quarter of enterprises with 100+ engineers have deployed AI beyond pilot stages.

AI is now a fundamental part of the modern software development lifecycle (SDLC).

Productivity Gains: Real and Measurable

The most cited benefit of AI-generated code is efficiency.

In a case study from a mid-sized fintech startup, a solo developer using AI scaffolding built a functional, scalable web app in two weeks—a task previously estimated to take six weeks with a team of three.

This aligns with analysis from GitHub Next, which shows that teams using AI spend less time on boilerplate and more on innovation.

The implication is clear: when used right, AI not only speeds up development but enhances strategic output.

The Flip Side: When AI Goes Wrong

Despite its benefits, AI code generation is not without risk. Large language models (LLMs) like GPT-4, Codex, and Claude have inherent limitations: hallucinations, lack of full project context, inconsistent style, and an inability to reason about security policies.

1. Code Bloat and Duplication

AI frequently generates verbose code or duplicates existing patterns instead of refactoring. A study found:

- 8× increase in duplicated code blocks across AI-assisted projects.

- Repositories with high AI use had 15–50% more bugs due to redundant code.

This bloated output contributes to technical debt and creates maintainability challenges, especially when engineers trust the AI’s output without validation.
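One way to make duplication visible before it turns into technical debt is to hash sliding windows of normalized lines and report any window that appears more than once. The sketch below is a minimal illustration, not the method used in the study cited above. The function name `duplicated_blocks` and the default window size are assumptions made for this example, and dedicated clone detectors are far more robust.

```python
import hashlib
from collections import defaultdict


def duplicated_blocks(source: str, window: int = 4) -> dict:
    """Map a hash of each repeated `window`-line block to its 1-based start lines."""
    # Normalize: strip surrounding whitespace and drop blank lines,
    # but remember each line's original number for reporting.
    lines = [
        (number, text.strip())
        for number, text in enumerate(source.splitlines(), start=1)
        if text.strip()
    ]
    seen = defaultdict(list)
    for start in range(len(lines) - window + 1):
        chunk = lines[start:start + window]
        joined = "\n".join(text for _, text in chunk)
        digest = hashlib.sha1(joined.encode("utf-8")).hexdigest()
        seen[digest].append(chunk[0][0])
    # Keep only blocks that occur at more than one location.
    return {digest: starts for digest, starts in seen.items() if len(starts) > 1}
```

Running a check like this in review, on the files an assistant touched, gives reviewers a concrete list of repeated blocks to consider refactoring.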

2. Lack of Global Context

LLMs—even with 100k-token windows—struggle to see and understand entire systems. This often leads to:

- Violations of naming conventions and architecture norms.

- Failure to reuse components or align with existing logic.

- Generation of inefficient or incompatible code.

3. Security Vulnerabilities

Security researchers from Georgetown’s CSET and SonarSource have flagged alarming trends:

- Nearly 50% of AI-generated code in controlled tests had exploitable bugs.

- LLMs commonly hallucinate unsafe logic: disabling input validation, hardcoding credentials, or introducing dangerous function calls.

A growing concern is “slopsquatting,” where LLMs invent plausible but nonexistent library names, which attackers then register as malicious packages. This threat is especially real in npm and PyPI ecosystems.
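A simple defence against invented package names is to compare what generated code imports with a list of dependencies the team has already vetted. The sketch below uses Python's standard `ast` module to collect top-level imports. The helper names and the allowlist contents are hypothetical, and import names do not always match PyPI distribution names, so treat this as a first filter rather than a complete check.

```python
import ast


def imported_top_level_modules(source: str) -> set:
    """Return the top-level module names imported by a piece of Python source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (level > 0) refer to local packages, so skip them.
            if node.level == 0 and node.module:
                modules.add(node.module.split(".")[0])
    return modules


def unapproved_imports(source: str, approved: set) -> set:
    """Return imported modules that are not on the approved list."""
    return imported_top_level_modules(source) - approved


# Hypothetical allowlist and a generated snippet with an unfamiliar package.
APPROVED = {"json", "requests"}
generated = "import json\nimport requests\nfrom fastjsonx.utils import load\n"
print(unapproved_imports(generated, APPROVED))  # {'fastjsonx'}
```

Anything the check flags should be looked up and vetted by a person before it is installed.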

4. Compliance and IP Concerns

Organizations must also navigate legal ambiguity:

- Who owns AI-generated code? OpenAI’s terms differ from those of Amazon and Google.

- Data leakage risk arises when developers paste proprietary code into public AI tools.

- Regulatory violations (GDPR, HIPAA, SOC 2) may result from misusing external LLMs without internal governance.

Trusting AI: Developer Sentiment and Behavior

Despite its power, developers remain cautious:

- Google’s 2024 DORA report: Only 24% “trust AI code a lot.”

- Infoworld’s analysis: Trust is often “partial and conditional.”

- A Stack Overflow poll: Only 2.3% highly trust AI suggestions.

When and How to Intervene: High-Risk Scenarios That Require Human Oversight

Even with clear productivity benefits, there are situations where human intervention is essential. Based on security advisories, real-world developer feedback, and expert recommendations, here are the five key scenarios where AI-generated code must be rigorously reviewed, or avoided:

1. Security-Critical Code

If the code handles:

- Authentication

- Authorization

- Data encryption

- User input validation

…then AI-generated output must be treated as untrusted until validated. AI tools are prone to introducing insecure logic, and static analysis or manual review is critical.

2. Complex Business Logic

Domain-specific functions—such as financial calculations, legal compliance rules, or logistics algorithms—require a deep contextual understanding that AI currently lacks. Always rely on SMEs and architects in these cases.

3. Architectural and Design Decisions

AI can refactor functions, but should not drive system architecture. Decisions involving data models, API boundaries, or service layers must be strategic and informed by human judgment.

4. Regulatory and IP Compliance

If your code must comply with:

- GDPR

- HIPAA

- SOX

- FINRA

- FDA 21 CFR Part 11

…then AI-generated code must go through a compliance review. Also, validate the licensing and copyright implications of code snippets generated by LLMs trained on third-party code.

5. Production-Grade Code

When moving from prototype to production, apply the highest scrutiny to any AI-generated block. This includes CI tests, vulnerability scans, and legal review for external dependencies.

Best Practices for Managing AI-Generated Code in Teams

To safely and systematically integrate AI into the development process, organizations should adopt the following governance, tooling, and cultural best practices:

1. Treat AI Code Like External Contributions

- Use pull request reviews for all AI-generated code.

- Require a developer to explain or annotate AI output in code reviews.

- Tools like Diffblue and Sonar AI Code Assurance enforce this via rule-based workflows.
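The annotation requirement can also be enforced with a small check in the review pipeline. The sketch below assumes a hypothetical team convention in which AI-assisted commits carry an `AI-Assisted: yes` trailer and the developer who reviewed and can explain the output adds an `AI-Explained-By:` trailer. Neither trailer is a Git or vendor standard, and this is not how Diffblue or Sonar implement their workflows.

```python
def trailers(message: str) -> dict:
    """Parse 'Key: value' lines from the last paragraph of a commit message."""
    last_paragraph = message.strip().split("\n\n")[-1]
    result = {}
    for line in last_paragraph.splitlines():
        key, separator, value = line.partition(":")
        key = key.strip()
        # Trailer keys are single tokens such as 'AI-Assisted'.
        if separator and key and " " not in key:
            result[key.lower()] = value.strip()
    return result


def needs_annotation(message: str) -> bool:
    """True when a commit is marked AI-assisted but nobody has signed off on explaining it."""
    parsed = trailers(message)
    ai_assisted = parsed.get("ai-assisted", "").lower() in {"yes", "true"}
    return ai_assisted and not parsed.get("ai-explained-by")
```

A CI job could call `needs_annotation` on each commit message in a pull request and fail the build when it returns `True`.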

2. Automate Safety Checks in CI/CD

- Run static analysis (SAST), dynamic tests, and license checks on every AI-assisted commit.

- Integrate AI-aware linters that detect common hallucinations or vulnerabilities.

- Use scanners like Semgrep, Snyk, or GitGuardian to catch embedded secrets or unsafe logic.
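To illustrate what such scanners look for, here is a deliberately small, regex-based sketch that flags lines resembling hardcoded credentials. The two patterns and the `find_secrets` helper are assumptions chosen for this example. Tools such as Semgrep, Snyk, or GitGuardian use much larger rule sets plus entropy and context analysis, so this is not a substitute for them.

```python
import re

# Illustrative patterns only; real scanners ship hundreds of rules.
SECRET_PATTERNS = {
    # AWS access key IDs start with 'AKIA' followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A credential-like name assigned a quoted literal of at least 8 characters.
    "hardcoded_credential": re.compile(
        r"(?i)(password|passwd|secret|api_key|token)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(source: str) -> list:
    """Return (line_number, rule_name) pairs for lines that match a secret pattern."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

Reading a credential from the environment, for example `password = os.environ["DB_PASSWORD"]`, does not match either pattern, which is the behaviour a reviewer would want to see in AI-assisted commits.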

3. Use Guardrails and Fine-Tuned Models

- Deploy on-prem or private models trained on your codebase.

- Apply retrieval-augmented generation (RAG) to provide project-specific context.

- Avoid generic models for regulated domains—use role- or industry-specific assistants like Code Agent in GetGenerative.ai or AWS’s CodeWhisperer for Healthcare.
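The retrieval step in RAG can be pictured as "find the most relevant project snippets, then put them in front of the task." The sketch below ranks snippets by simple token overlap (Jaccard similarity) and builds a prompt from the best matches. Production systems typically use embedding-based search instead, and the function names, file paths, and prompt layout here are all assumptions made for illustration.

```python
import re


def tokens(text: str) -> set:
    """Lowercase alphanumeric tokens; 'compute_tax' becomes {'compute', 'tax'}."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, snippets: dict, k: int = 2) -> list:
    """Return up to k snippet names ranked by Jaccard similarity to the query."""
    query_tokens = tokens(query)

    def score(name: str) -> float:
        snippet_tokens = tokens(snippets[name])
        union = query_tokens | snippet_tokens
        return len(query_tokens & snippet_tokens) / len(union) if union else 0.0

    ranked = sorted(snippets, key=lambda name: (-score(name), name))
    # Drop snippets that share nothing with the query.
    return [name for name in ranked[:k] if score(name) > 0]


def build_prompt(query: str, snippets: dict, k: int = 2) -> str:
    """Prepend the retrieved project snippets to the task description."""
    context = "\n\n".join(
        f"# {name}\n{snippets[name]}" for name in retrieve(query, snippets, k)
    )
    return f"Project context:\n{context}\n\nTask: {query}"
```

Supplying existing helpers this way makes it more likely that the assistant reuses them rather than generating a parallel implementation.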

4. Establish AI Governance Policies

- Define:

  - What tools are approved

  - What use cases are permitted

  - What data is allowed in prompts

- Implement Acceptable Use Policies for AI, especially for generative tools used in production.

- Log and monitor AI tool usage to ensure compliance and transparency.
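A governance policy is easier to enforce and audit when it is expressed as data that tooling can check. The sketch below models the three questions above (approved tools, permitted use cases, data allowed in prompts) as a small policy object. The class name, field names, and example values are hypothetical. A real policy would be defined by your security and legal teams.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsagePolicy:
    approved_tools: frozenset
    permitted_use_cases: frozenset
    # Compiled regexes describing data that must never appear in a prompt.
    forbidden_prompt_patterns: tuple = ()

    def violations(self, tool: str, use_case: str, prompt: str) -> list:
        """Return human-readable policy violations for one AI request."""
        problems = []
        if tool not in self.approved_tools:
            problems.append(f"tool not approved: {tool}")
        if use_case not in self.permitted_use_cases:
            problems.append(f"use case not permitted: {use_case}")
        for pattern in self.forbidden_prompt_patterns:
            if pattern.search(prompt):
                problems.append(f"prompt matches forbidden pattern: {pattern.pattern}")
        return problems


# Hypothetical policy: one approved tool, one use case, no US SSN-like strings in prompts.
policy = AIUsagePolicy(
    approved_tools=frozenset({"copilot"}),
    permitted_use_cases=frozenset({"unit-tests"}),
    forbidden_prompt_patterns=(re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),),
)
```

Logging the result of `policy.violations(...)` for each request also produces the usage trail that the monitoring bullet calls for.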

5. Encourage Developer Training

- Teach prompt engineering and AI limitations.

- Train teams to recognize hallucinated code (e.g., unknown libraries, API mismatches).

- Foster a “challenge the AI” culture, where verifying AI output is not just encouraged, but expected.
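One habit worth teaching is to check that an API actually exists before trusting code that calls it. The sketch below uses the standard `importlib` module to test whether a module can be imported and whether a dotted attribute path resolves on it. The helper name `api_exists` is an assumption. Note that importing a module executes its top-level code, so only run this against packages you already trust.

```python
import importlib


def api_exists(module_name: str, attribute_path: str) -> bool:
    """Return True if `module_name` imports and `attribute_path` resolves on it."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        # Covers ModuleNotFoundError: the module is not installed or does not exist.
        return False
    for part in attribute_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


print(api_exists("json", "loads"))      # True
print(api_exists("json", "load_fast"))  # False
```

A `False` result is not proof of a hallucination, since the code may target a different library version, but it is a strong signal that the suggestion needs a closer look.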

6. Incremental Rollouts and Canary Deployments

- Use feature flags, canary releases, or A/B testing to gradually deploy AI-generated modules.

- Monitor telemetry for performance degradation, error rates, and security anomalies.

- Roll back AI-assisted code quickly if instability is detected.
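The rollout and rollback steps above can be sketched with two small functions: one that deterministically assigns a percentage of users to the canary, and one that compares the canary's error rate with the baseline. The function names, the tolerance, and the minimum sample size are assumptions for illustration. Real deployments usually rely on a feature-flag service and proper statistical tests.

```python
import hashlib


def in_canary(user_id: str, flag: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of users in the canary for `flag`."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent


def should_roll_back(
    canary_errors: int,
    canary_requests: int,
    baseline_errors: int,
    baseline_requests: int,
    tolerance: float = 0.01,
    min_requests: int = 100,
) -> bool:
    """Roll back when the canary error rate exceeds the baseline by more than `tolerance`."""
    if canary_requests < min_requests:
        return False  # not enough traffic to judge yet
    canary_rate = canary_errors / canary_requests
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    return canary_rate > baseline_rate + tolerance
```

Because the bucket is derived from a hash of the flag name and user ID, a given user sees the same variant on every request, which keeps telemetry comparisons clean.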

How Top AI Tools Enable Responsible Code Generation

| Tool | Strengths | Governance Capabilities | Use Case Fit |
| --- | --- | --- | --- |
| GitHub Copilot | IDE integration, fast prototyping | Enterprise version supports policies, logs | Best for boilerplate and JS/TS dev |
| Amazon CodeWhisperer | Context-aware, supports security scans | Service-linked IAM, integrates with S3/Git | AWS-native workflows |
| GetGenerative.ai | Salesforce-aware, metadata-driven, code + config | Custom models, org-bound security, JIRA/GitHub integration | Salesforce projects and vibe coding |
| Code Agent (GetGenerative.ai) | Prompt-to-Apex, tests, LWC, config deployment | Full traceability and role-based prompts | Complex Salesforce development |
| Cursor / Replit Ghostwriter | Instant feedback for frontend/backend apps | Limited governance (for now) | Startups, solo devs |

Final Words

AI-generated code holds the potential to transform software development—but only if adopted thoughtfully. The future of coding is not fully automated; it’s AI-assisted and human-curated.

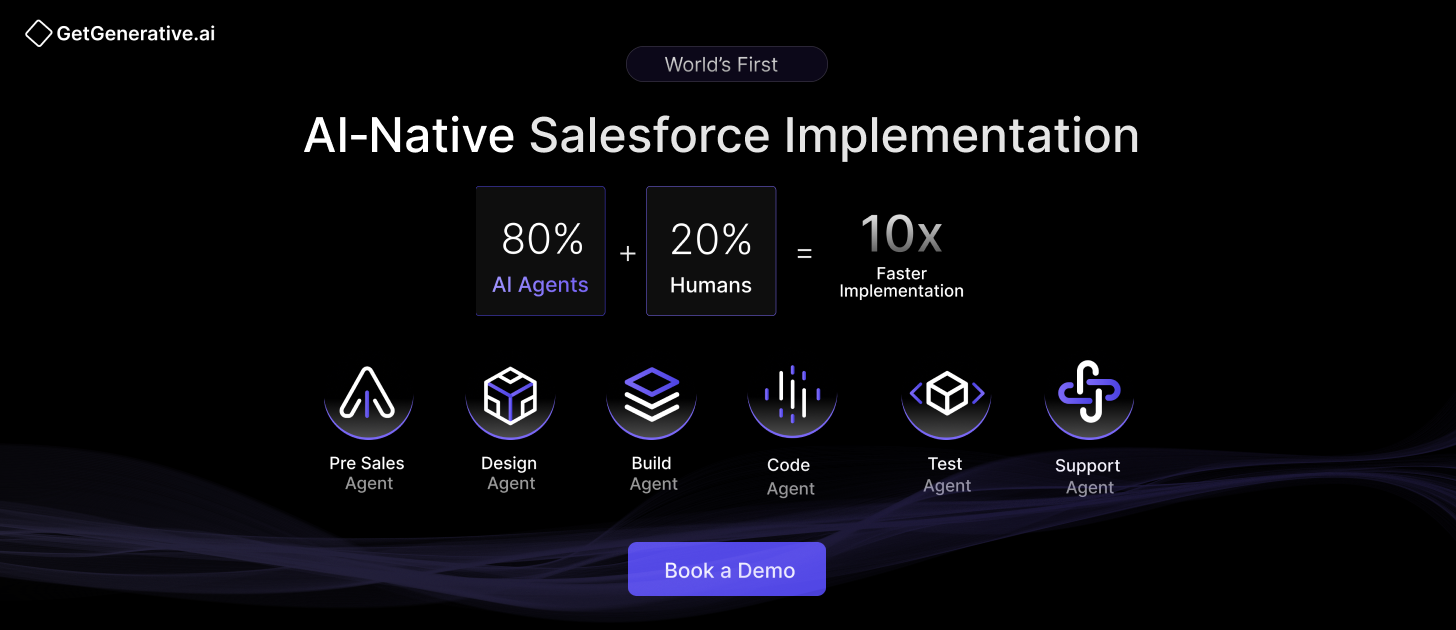

At GetGenerative.ai, we’ve reimagined Salesforce implementation—built from the ground up with AI at the core. This isn’t legacy delivery with AI added on. It’s a faster, smarter, AI-native approach powered by our proprietary platform.
