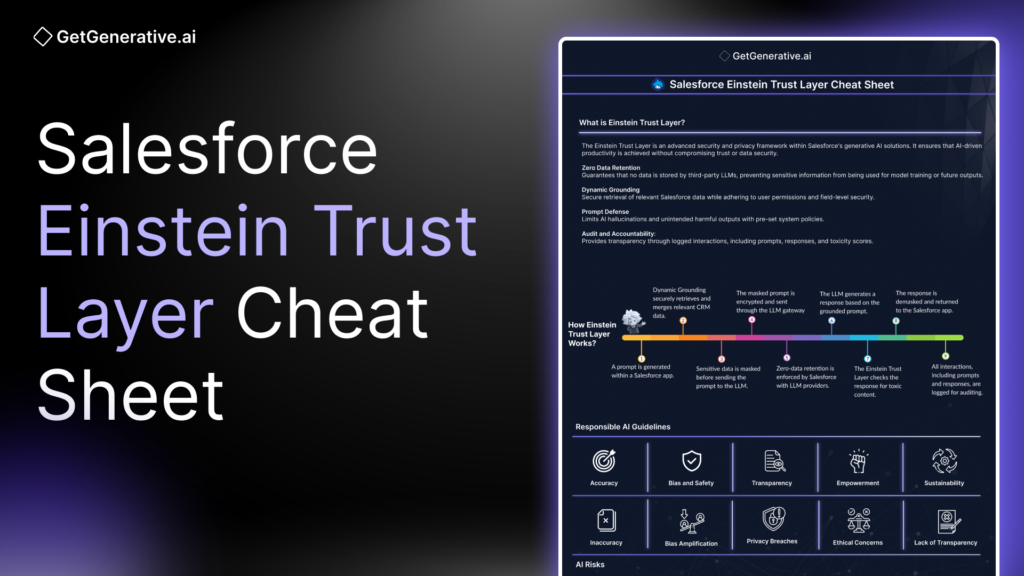

Salesforce Einstein Trust Layer Cheat Sheet

Salesforce’s Einstein Trust Layer plays a critical role in ensuring data privacy and security within generative AI processes. This blog will guide you through the key features, setup, and best practices for leveraging the Einstein Trust Layer to safeguard your organization’s AI interactions.

What You Will Learn:

- Key features of the Einstein Trust Layer and how it ensures AI security

- Step-by-step guide to setting up the Trust Layer and Einstein Generative AI

- How to audit AI data and monitor user feedback effectively

- Best practices for ensuring responsible AI usage in your Salesforce environment

What is the Einstein Trust Layer?

The Einstein Trust Layer is a critical security framework integrated within Salesforce’s generative AI solutions. It enhances data privacy, ensures secure AI interactions, and maintains trust throughout AI-driven processes. This layer enables organizations to harness the power of AI while upholding Salesforce’s core value of Trust by providing a safe and secure environment for AI usage.

Key features of the Einstein Trust Layer include:

- Zero Data Retention: Ensures that sensitive information is not retained by third-party large language models (LLMs), preventing the use of your data for training or improvements.

- Dynamic Grounding: Retrieves Salesforce data securely and merges it into prompts while respecting user permissions and field-level security.

- Prompt Defense: Implements system policies that limit AI hallucinations and prevent harmful or unintended outputs.

- Audit and Accountability: Tracks prompts, responses, and toxicity scores to ensure transparency, with reporting tools available for analysis.

Source: Salesforce

Why the Einstein Trust Layer Matters

The Einstein Trust Layer goes beyond basic security measures by adapting to the specific needs of each organization. Through dynamic data retrieval, data masking, and real-time adaptability based on user permissions, organizations can safely scale their AI initiatives without compromising security. This enables the responsible use of AI while maximizing productivity and ensuring data privacy.

How the Einstein Trust Layer Works

The Einstein Trust Layer secures data as it moves through various stages of the generative AI process. The entire journey, from prompt creation to response delivery, is secured to protect sensitive information. Here’s how it works:

1. Prompt Journey

- A prompt is generated from a Salesforce app, such as the Service Console, using a pre-built template.

- The prompt is dynamically grounded using relevant CRM data (e.g., customer records, flows, knowledge articles), while secure data retrieval ensures that user permissions and access controls are respected.

- Sensitive data is identified and masked before being sent to the LLM.

2. LLM Gateway

- The masked prompt passes through the secure LLM Gateway, where it is encrypted before being sent to the connected LLM (e.g., OpenAI).

- Salesforce enforces a zero-data retention policy with external LLM providers, ensuring that no data is stored or used for training.

3. Response Generation

- The LLM generates a response based on the grounded prompt and sends it securely back through the same gateway.

- Before reaching the user, the Einstein Trust Layer applies additional checks such as toxic language detection to ensure the content is safe.

4. Response Journey

- The response is demasked, and placeholders are replaced with original data for personalization.

- The response is delivered back to the Salesforce app for the end user to view and use.

- An audit trail logs every interaction, including the original prompt, masked prompt, toxicity scores, and feedback data for accountability and analysis.

Source: Salesforce
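
The mask, send, and demask steps above can be sketched in a few lines of Python. This is a toy illustration, not Salesforce's implementation: the regex patterns, the placeholder format, and the `fake_llm` function are all assumptions made for the example, and real detection covers far more data types.

```python
import re

# Toy patterns for illustration only (assumed, not Salesforce's detectors).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask(prompt: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with placeholders and remember the mapping."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            placeholder = f"<{label}_{len(mapping) + 1}>"
            mapping[placeholder] = match.group(0)
            return placeholder
        prompt = pattern.sub(substitute, prompt)
    return prompt, mapping


def demask(response: str, mapping: dict[str, str]) -> str:
    """Swap placeholders back to the original values before display."""
    for placeholder, original in mapping.items():
        response = response.replace(placeholder, original)
    return response


def fake_llm(masked_prompt: str) -> str:
    """Hypothetical stand-in for the external model; it only sees placeholders."""
    return f"Draft reply based on: {masked_prompt}"


prompt = "Email jane.doe@example.com about order 1001."
masked, mapping = mask(prompt)
reply = demask(fake_llm(masked), mapping)
```

The point of the design is that the mapping between placeholders and real values never leaves the trusted side: the model receives only `masked`, and the original values are restored after the response comes back.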

Use Case Example: Enhancing Customer Support

A global e-commerce company aims to improve customer support by generating AI-driven responses that are personalized and contextually grounded in real-time customer data.

Solution: The company uses Dynamic Grounding through the Einstein Trust Layer. By dynamically retrieving relevant data such as order history and previous interactions from Salesforce, the Trust Layer integrates this data into prompts. This ensures that the AI-generated responses are accurate, personalized, and tailored to the customer’s specific inquiry.
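
As a rough sketch of the idea, grounding can be pictured as merging only the fields the current user may read into a prompt template. The record, the field names, and the permission set below are hypothetical, and the snippet uses Python's standard `string.Template` rather than any Salesforce API.

```python
from string import Template

# Hypothetical record and permission set; field names are illustrative only.
order_record = {
    "customer_name": "Jane Doe",
    "order_status": "Shipped",
    "credit_card_last4": "4242",
}
agent_readable_fields = {"customer_name", "order_status"}

template = Template(
    "Write a reply to $customer_name explaining that the order is $order_status."
)


def ground(template: Template, record: dict[str, str], readable: set[str]) -> str:
    """Merge only the fields the current user is allowed to read."""
    visible = {k: v for k, v in record.items() if k in readable}
    # safe_substitute leaves unknown placeholders untouched instead of raising.
    return template.safe_substitute(visible)


grounded = ground(template, order_record, agent_readable_fields)
```

Because the record is filtered before substitution, a template that references a restricted field simply keeps its placeholder instead of leaking the value.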

Create Responsible Generative AI: Ensuring Trust, Safety, and Ethics

Generative AI offers transformative capabilities for creating content, driving customer engagement, and streamlining business processes. However, the powerful nature of this technology also introduces ethical and operational risks. Salesforce addresses these challenges by embedding responsible AI principles into its generative AI tools.

These principles ensure that trust, safety, and ethical standards are maintained throughout AI applications, helping to mitigate risks such as bias, accuracy issues, privacy breaches, and environmental impact.

Key Considerations for Responsible Generative AI

1. Accuracy

Generative AI relies on vast datasets for content generation, but this can sometimes result in outputs that are not factually accurate. To mitigate this risk:

- Ensure clear guidelines are in place for citing sources.

- Validate AI-generated responses through human review.

2. Safety and Bias

Preventing bias, toxicity, and privacy risks is crucial for maintaining the integrity of AI-driven content. To ensure safety:

- Implement regular assessments and red teaming.

- Establish robust guardrails to prevent harmful or biased outcomes.

3. Honesty and Transparency

To avoid confusion between AI-generated and human-generated content:

- Clearly indicate AI-generated content with disclaimers or watermarks.

- Ensure users are aware of the origin of the content.

4. Empowerment

Strive for a balance between AI automation and human judgment by:

- Allowing users to enhance their work with AI while retaining control over key decisions.

- Encouraging collaboration between AI and human expertise.

5. Sustainability

The environmental impact of AI models should not be overlooked. To maintain sustainability:

- Prioritize building right-sized AI models that optimize performance while minimizing environmental impact.

- Use computational resources efficiently to balance AI capabilities with sustainability goals.

Risks of Generative AI

While generative AI can greatly enhance productivity, it also presents risks that require careful oversight and safeguards.

- Inaccurate Information: Generative AI may produce convincing yet factually incorrect content. Without proper validation, this can lead to the spread of misinformation.

- Amplified Bias: AI models trained on biased data may unintentionally perpetuate or amplify societal biases, resulting in unfair outcomes. Continuous tuning and oversight are essential to prevent this.

- Privacy Breaches: Without proper data management, generative AI models can leak sensitive information. Enforcing strict data masking and zero-data retention policies is crucial to safeguard privacy.

- Ethical Dilemmas: Automating decisions in sensitive areas such as healthcare or finance can introduce ethical challenges. Human oversight is necessary to ensure that AI-generated decisions align with ethical standards.

Setting Up the Einstein Trust Layer

Setting up the Einstein Trust Layer aligns its security and privacy controls with your organization’s policies. The setup process involves configuring Einstein Generative AI and Data Cloud, then adjusting settings such as data masking to protect sensitive information. The Trust Layer supports sandbox environments for testing, but sandboxes come with certain limitations.

Key Considerations for Setting Up Einstein Trust Layer

1. Prerequisites: Before setting up the Trust Layer, ensure that both Einstein Generative AI and Data Cloud are configured. Data Cloud is essential for enabling features such as data masking and audit trails.

2. Sandbox Environment Limitations: While sandbox environments are supported, some key features are not available for testing, such as:

- LLM Data Masking configuration within the Einstein Trust Layer setup.

- Grounding on objects within Data Cloud.

- Logging and reviewing audit and feedback data in Data Cloud.

3. Multi-Language and Region Support: The Trust Layer supports data masking and toxicity detection across multiple languages and regions. However, certain sensitive data types, such as US-specific IDs and ITINs, are supported only in English.

Insight

While detection models within the Trust Layer have shown effectiveness based on internal testing, they are not foolproof. Cross-region and multi-country use cases may introduce challenges in accurately detecting specific data patterns. Continuous evaluation and refinement are essential to maintain trust and improve detection over time.

Setting Up Einstein Generative AI

Configuring Einstein Generative AI opens the door to AI-driven features across your Salesforce environment. Proper setup ensures the integration of generative AI capabilities while maintaining data privacy and compliance.

Key Steps for Setting Up Einstein Generative AI

- Verify Data Cloud in Your Org: Ensure that Data Cloud is provisioned and enabled. This is a prerequisite for using Einstein Generative AI functionalities, including the Trust Layer.

- Turn On Einstein Generative AI: Enable generative AI features across Salesforce by turning on Einstein. This step syncs Einstein with Data Cloud, unlocking its AI capabilities.

- Set Up Einstein Trust Layer: Configure the Trust Layer to integrate data privacy controls, ensuring secure and compliant AI operations across the platform.

- Turn On Einstein Data Collection and Storage: Enable the collection and storage of generative AI audit and feedback data in Data Cloud. This allows you to monitor AI performance, ensure compliance, and fine-tune AI responses for better outcomes.

Auditing Generative AI Data

Auditing generative AI data lets you track and store information about the performance and safety of generative AI within your Salesforce org. The audit covers prompt templates, response metadata, toxicity scores, and user interactions.

Key Considerations for Auditing Generative AI Data

Types of Data Collected

There are two main types of data collected:

- Generative AI audit data: This includes prompt details, masked/unmasked responses, and toxicity scores.

- Feedback data: Captures user interactions like thumbs up/down and response modifications.

Data Collection and Storage: All data is stored in Data Cloud, with streams refreshed hourly. Data can be accessed through Data Cloud reporting tools, and stored data can be deleted when necessary.

Billing Considerations: Storing and processing generative AI audit data consumes Data Cloud credits, particularly during data ingestion, storage, and query operations.

Credit Usage: Monitor credit usage regularly, because ingesting and processing generative AI audit data can increase your Data Cloud bill. Periodic reviews help you assess the impact and manage costs.

Data Retention: Data stored in Data Cloud can be deleted, but careful management is necessary to avoid unintentionally removing critical audit information.

Insight

To enable Einstein Generative AI data collection, ensure that Data Cloud is provisioned and Einstein is activated in your org. From the Setup menu, navigate to Einstein Feedback, and on the setup page, turn on Collect and Store Einstein Generative AI Audit Data. Data will begin appearing in Data Cloud within 24 hours, providing the foundation for audit and feedback analysis.

Use Case Example: Monitoring AI Interactions

A retail company wants to monitor the safety and performance of AI-generated customer interactions to ensure compliance and optimize response quality.

Solution: The company uses Data Cloud to track audit data, such as prompt details, response scores, and feedback interactions. This enables them to generate reports, analyze AI behavior, and make data-driven adjustments to improve the customer experience while staying compliant with privacy regulations.

Report AI Audit and Feedback Data

Reporting on AI audit and feedback data in Salesforce provides vital insights into the performance and safety of generative AI features. By leveraging pre-built dashboards and reports in Data Cloud, you can monitor key metrics such as data masking trends, toxicity detection, and user feedback. These tools allow for a comprehensive understanding of AI interactions and help ensure responsible AI usage across your organization.

Key Dashboards and Reports

1. Einstein Generative AI and Feedback Data Dashboard

This dashboard tracks weekly user engagement and request trends to the large language model (LLM). It includes:

- Weekly count of users interacting with generative AI features.

- Weekly count of requests made to the LLM.

- Total user feedback collected by feature.

- Weekly token usage to monitor AI consumption.

2. Einstein Trust Layer Dashboard

This dashboard highlights data masking trends, toxic response detection, and concerning patterns across various time frames.

- Data masking trends over 7 days, 30 days, and all time.

- Toxic response trends categorized over 7 days, 30 days, and all time.

- Data masking and toxic response trends by feature, allowing you to see which areas may need additional oversight.

3. Toxicity Detection in Responses Report

This report provides detailed views of toxic responses detected by the AI. It includes:

- The exact response text.

- The number of toxic detections by feature for in-depth analysis.

4. Masked Prompt and Response Report

This report offers a comprehensive view of how prompts and responses are processed, including:

- Masked and unmasked data to show what sensitive information was protected before sending to the LLM.

- How responses were personalized for the end-user.
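
To make the weekly metrics in the first dashboard concrete, here is a minimal sketch of how such counts could be derived from raw request rows. The row layout (`user`, `day`, `tokens`) is an assumption for illustration and does not reflect the actual Data Cloud schema or how the pre-built dashboards compute their figures.

```python
from collections import defaultdict
from datetime import date

# Hypothetical request rows; the field names are illustrative only.
requests = [
    {"user": "u1", "day": date(2024, 5, 6), "tokens": 120},
    {"user": "u2", "day": date(2024, 5, 8), "tokens": 80},
    {"user": "u1", "day": date(2024, 5, 8), "tokens": 50},
    {"user": "u1", "day": date(2024, 5, 13), "tokens": 200},
]


def weekly_summary(rows: list[dict]) -> dict[tuple[int, int], dict[str, int]]:
    """Group rows by ISO (year, week) and count users, requests, and tokens."""
    weeks = defaultdict(lambda: {"users": set(), "requests": 0, "tokens": 0})
    for row in rows:
        iso = row["day"].isocalendar()
        bucket = weeks[(iso[0], iso[1])]
        bucket["users"].add(row["user"])
        bucket["requests"] += 1
        bucket["tokens"] += row["tokens"]
    return {
        week: {
            "users": len(data["users"]),
            "requests": data["requests"],
            "tokens": data["tokens"],
        }
        for week, data in weeks.items()
    }


summary = weekly_summary(requests)
```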

Insights for Reporting

To access Einstein Generative AI audit and feedback data, first ensure that data collection is enabled. Navigate to the App Launcher, search for “Data Cloud,” and explore the Dashboards or Reports tabs. Search for “Einstein Generative AI” within all folders to view detailed metrics on AI performance, user feedback, and audit data.

You can automate the monitoring of generative AI audit and feedback data by setting up flows in Data Cloud. For example, if toxicity scores for responses exceed a specific threshold for several consecutive days, you can trigger an alert for investigation. This proactive approach ensures that AI outputs remain aligned with your organization’s ethical and safety standards, maintaining trust and reliability.
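
The alert condition described above, scores over a threshold for several consecutive days, can be expressed as a small check. This is a plain-Python sketch of the logic only, not a Data Cloud flow; the function name, the input shape (one maximum toxicity score per day), and the threshold values are assumptions.

```python
from datetime import date, timedelta


def should_alert(daily_max_scores: dict[date, float], threshold: float, min_days: int) -> bool:
    """Return True if scores exceed the threshold on min_days consecutive calendar days."""
    streak = 0
    previous = None
    for day in sorted(daily_max_scores):
        above = daily_max_scores[day] > threshold
        consecutive = previous is not None and day - previous == timedelta(days=1)
        if above:
            # Extend the streak only when the previous day was also above the threshold.
            streak = streak + 1 if consecutive and streak > 0 else 1
        else:
            streak = 0
        if streak >= min_days:
            return True
        previous = day
    return False


scores = {
    date(2024, 5, 1): 0.2,
    date(2024, 5, 2): 0.8,
    date(2024, 5, 3): 0.9,
    date(2024, 5, 4): 0.85,
}
alert = should_alert(scores, threshold=0.7, min_days=3)
```

A day with no data breaks the streak in this sketch, which is a deliberate choice: an alert on "consecutive days" should not silently bridge gaps in the audit stream.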

Data Model for Generative AI Audit and Feedback

To fully analyze your Einstein Generative AI audit and feedback data, you need to understand the relevant Data Model Objects (DMOs). Audit and feedback data is stored in Data Lake Objects (DLOs), which are mapped to DMOs for detailed analysis.

Key DMOs and Their Attributes

1. Einstein Generative AI App Generation DMO

Captures feature-specific changes made to the original generated text.

- Key attributes: Generation type, text versions, and modification details.

2. Einstein Generative AI Content Category DMO

Stores safety and toxicity score data.

- Key attributes: Detector type, sub-category, and scoring details.

3. Einstein Generative AI Content Quality DMO

Captures whether a request or response is marked as safe or unsafe.

- Key attributes: Request safety indicators and content flags.

4. Einstein Generative AI Feedback DMO

Stores user feedback related to specific generated content.

- Key attributes: Feedback type, response ID, and feedback timestamp.

5. Einstein Generative AI Feedback Detail DMO

Provides detailed user feedback, including sensitive information if applicable.

- Key attributes: User input details, feedback reasons, and comment specifics.

6. Einstein Generative AI Gateway Request DMO

Captures prompt inputs, request parameters, and model details.

- Key attributes: Prompt content, model settings, and request metadata.

7. Einstein Generative AI Gateway Request Tag DMO

Stores custom data points for more tailored analysis.

- Key attributes: Tag names and associated values.

8. Einstein Generative AI Gateway Response DMO

Enables deeper analysis by joining request and generation data.

- Key attributes: Response IDs, processing time, and response quality indicators.

9. Einstein Generative AI Generations DMO

Captures generated responses, including masked prompts if masking is enabled.

- Key attributes: Generation output, masked data, and user interaction records.
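
The objects above become most useful when joined. The sketch below shows the general shape of such a join, linking feedback to generations and generations to requests in order to count feedback per feature. The class and field names (`request_id`, `generation_id`, `feature`, `feedback`) are hypothetical simplifications, not the actual DMO attribute names.

```python
from dataclasses import dataclass


@dataclass
class GatewayRequest:
    request_id: str
    feature: str


@dataclass
class Generation:
    generation_id: str
    request_id: str


@dataclass
class Feedback:
    generation_id: str
    feedback: str  # e.g. "GOOD" or "BAD" (assumed values)


def feedback_by_feature(
    requests: list[GatewayRequest],
    generations: list[Generation],
    feedback: list[Feedback],
) -> dict[str, dict[str, int]]:
    """Join feedback -> generation -> request and count feedback per feature."""
    feature_of_request = {r.request_id: r.feature for r in requests}
    feature_of_generation = {
        g.generation_id: feature_of_request[g.request_id]
        for g in generations
        if g.request_id in feature_of_request
    }
    counts: dict[str, dict[str, int]] = {}
    for item in feedback:
        feature = feature_of_generation.get(item.generation_id)
        if feature is None:
            continue  # Skip feedback that cannot be traced back to a request.
        bucket = counts.setdefault(feature, {})
        bucket[item.feedback] = bucket.get(item.feedback, 0) + 1
    return counts


result = feedback_by_feature(
    [GatewayRequest("r1", "Service Replies"), GatewayRequest("r2", "Sales Emails")],
    [Generation("g1", "r1"), Generation("g2", "r1"), Generation("g3", "r2")],
    [Feedback("g1", "GOOD"), Feedback("g2", "BAD"), Feedback("g3", "GOOD"), Feedback("g9", "BAD")],
)
```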

Key Benefits of the Einstein Trust Layer for Organizations

Implementing the Einstein Trust Layer offers organizations significant advantages, ensuring a seamless and secure adoption of generative AI across Salesforce environments. Here’s a breakdown of the key benefits:

Enhanced Data Privacy

The Einstein Trust Layer ensures that sensitive data is never retained by third-party LLMs, safeguarding confidential information from being reused or compromised. This robust privacy protection aligns with regulatory standards and fosters client trust.

Improved Compliance

By adhering to strict policies on data masking and zero data retention, the Trust Layer simplifies compliance with global data protection laws like GDPR, CCPA, and HIPAA. Salesforce’s audit trail features also make it easier to document compliance efforts for external audits.

Dynamic Personalization at Scale

Leveraging Dynamic Grounding, the Einstein Trust Layer combines secure Salesforce data with AI-generated prompts, enabling highly personalized, context-aware responses without breaching user permissions or exposing sensitive data.

Transparency Through Accountability

The built-in auditing and reporting tools provide comprehensive insights into AI interactions, including prompt history, response quality, and toxicity scores. This fosters accountability and equips organizations with actionable data to optimize AI performance.

Mitigation of Ethical Risks

The Trust Layer’s real-time toxicity detection and response validation features help prevent the dissemination of harmful or biased content. These safeguards ensure that generative AI outputs align with ethical standards and organizational values.

Scalable AI Adoption

With region-specific support for multi-language environments and adaptability to organizational needs, the Einstein Trust Layer empowers businesses to scale their AI initiatives securely and effectively.

Conclusion

The Einstein Trust Layer is an essential component for secure, ethical, and efficient AI-driven processes in Salesforce. By implementing this framework, you can maintain data privacy, reduce the risk of toxic or biased outputs, and foster trust within your organization.
