Salesforce Trusted AI Principles

Salesforce is committed to responsible and ethical AI practices. The company has crafted its Trusted AI Principles to ensure that its AI technologies not only drive innovation but also uphold values of trust, fairness, and inclusivity.

Let’s explore these principles and their implications in detail.

Understanding Salesforce’s Trusted AI Principles

Salesforce’s Trusted AI Principles revolve around five core tenets: Responsible, Accountable, Transparent, Empowering, and Inclusive. These principles are designed to foster ethical AI practices while aligning with the company’s overarching values of trust, customer success, innovation, equality, and sustainability.

5 Core Principles of Trusted AI

1: Responsible — The Foundation of Ethical Decision-Making

Salesforce starts not with the question “Can we build this?” but with “Should we build this?”

This shift operationalizes ethics at the product design stage, embedding systematic checkpoints that prevent harmful AI applications before they ever reach market.

Key Practices:

Collaboration with external human rights experts

Continuous learning frameworks to evolve safeguards

Proactive bias mitigation tools built in from day one

For enterprises, this means inheriting pre-built safeguards instead of scrambling to retrofit compliance later. Salesforce also extends responsibility to customer education, arming organizations with resources to make informed AI adoption decisions.

Takeaway:

Executives should establish similar philosophical foundations:

Secure executive sponsorship for ethical AI initiatives

Allocate dedicated budgets for bias testing

Partner with domain experts to assess societal impact before deployment

2: Accountable — Oversight and Continuous Improvement

Accountability isn’t just a policy—it’s a system. Salesforce enforces it through:

Structured feedback loops with customers

Industry collaboration in working groups

Ongoing model and training data reviews across the AI lifecycle

This approach tackles a major gap: 36% of organizations lack clear AI governance ownership.

The Einstein Trust Layer brings accountability to life with:

Audit trails for every AI decision, enabling post-event analysis

Documentation to support both regulatory compliance and operational excellence

Takeaway:

Accountability frameworks can satisfy compliance demands and boost innovation when they include:

Executive oversight

Technical review gates

External validation

3: Transparent — Building Trust Through Explainability

Transparency means more than technical docs—it’s about explanations people can actually understand.

Salesforce Implementation:

Model cards outlining creation process, intended use, and limitations

Real-time citations and source attributions via the Einstein Trust Layer

Clear disclosure when AI contributes to customer interactions (critical since 54% of consumers can now spot AI content)

Takeaway:

Adopt frameworks that balance plain-language clarity with technical accuracy. Model cards are a strong template for aligning technical teams, business stakeholders, and regulators.
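To make the model card idea concrete, here is a minimal sketch of one as a small data structure that renders to plain language. The `ModelCard` class, its field names, and the example values are hypothetical illustrations based on the three elements listed above (creation process, intended use, limitations). They are not Salesforce's actual model card schema.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical model card holding the three elements named in the article."""
    name: str
    creation_process: str
    intended_use: str
    limitations: list = field(default_factory=list)

    def to_text(self) -> str:
        """Render the card as plain text that non-technical readers can follow."""
        lines = [
            f"Model: {self.name}",
            f"How it was built: {self.creation_process}",
            f"Intended use: {self.intended_use}",
            "Known limitations:",
        ]
        lines += [f"  * {item}" for item in self.limitations]
        return "\n".join(lines)


# Example values are invented for illustration only.
card = ModelCard(
    name="lead-scoring-demo",
    creation_process="Trained on historical, anonymized sales records.",
    intended_use="Ranking inbound leads for follow-up by a sales team.",
    limitations=[
        "Not validated for hiring or credit decisions.",
        "Accuracy may drop for regions missing from the training data.",
    ],
)
print(card.to_text())
```

Keeping the card as structured data means the same source can feed a technical review, a business summary, and a regulator-facing document without the three drifting apart.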

4: Empowering — Democratizing AI While Maintaining Control

Salesforce proves that AI accessibility and governance can coexist.

Through Trailhead and low-code AI tools, the company empowers non-technical teams to innovate while the Einstein Trust Layer enforces:

Enterprise-grade security

Regulatory compliance

Governance baked into every workflow

This is crucial for the 36% of organizations lacking AI expertise—democratization fills the skills gap without compromising control.

Takeaway:

Well-designed empowerment strategies can speed up AI adoption while upholding governance standards.

5: Inclusive — Ensuring AI Benefits Everyone

Inclusivity means designing for all stakeholders from the start.

Salesforce Practices:

Diverse dataset testing

Consequence scanning workshops

Inclusive team composition requirements

Embedded fairness testing and bias mitigation algorithms

Bias can emerge from training data, algorithms, deployment contexts, or user interactions—Salesforce tackles it from all angles.
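One common way to put fairness testing into practice is to compare outcome rates across groups. The sketch below computes a simple demographic parity gap. The function names and sample data are hypothetical, and this is a generic fairness check rather than a description of Salesforce's own algorithms.

```python
def selection_rates(outcomes):
    """Return the share of positive outcomes per group.

    `outcomes` is a list of (group, approved) pairs, where approved is a bool.
    """
    totals, positives = {}, {}
    for group, approved in outcomes:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(outcomes):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


# Invented sample: group A is approved 3 times out of 4, group B once out of 4.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
print(selection_rates(sample))
print(parity_gap(sample))
```

A gap near zero suggests groups receive positive outcomes at similar rates. A large gap is a signal to investigate, not proof of unfairness on its own, which is why the organizational processes mentioned in this section matter alongside the metric.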

Takeaway:

Inclusion requires both technical controls and organizational processes to measure and maintain equity in outcomes.

The Einstein Trust Layer

Salesforce’s Einstein Trust Layer is the architectural engine that operationalizes these principles.

1. Secure Data Retrieval & Dynamic Grounding

Honors existing Salesforce permissions and role-based access control

Dynamically enriches AI prompts with business context without exposing private data

Allows AI deployment without restructuring data governance
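The idea behind permission-aware grounding can be sketched in a few lines: filter records by what the requesting user is allowed to see, then add only those records to the prompt. The `Record` class, role names, and `build_grounded_prompt` function are hypothetical. This illustrates the general pattern, not the Einstein Trust Layer's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical business record with a set of roles allowed to read it."""
    title: str
    body: str
    allowed_roles: frozenset


def build_grounded_prompt(question, user_role, records):
    """Enrich a question with only the records the user's role may access."""
    visible = [r for r in records if user_role in r.allowed_roles]
    context = "\n".join(f"- {r.title}: {r.body}" for r in visible)
    return f"Context:\n{context}\n\nQuestion: {question}"


# Invented sample data for illustration.
records = [
    Record("Case 1042", "Customer reports a billing error.",
           frozenset({"support", "finance"})),
    Record("Payroll Q3", "Internal salary adjustments.",
           frozenset({"finance"})),
]

print(build_grounded_prompt("Summarize open issues.", "support", records))
```

Because the filter reuses access rules that already exist on the records, the prompt never contains data the user could not have opened directly.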

2. Data Masking & Zero Retention Policies

Uses pattern-based and ML-powered detection to remove PII, PCI, and other sensitive data before LLM processing

Enforces zero data retention with external providers, so prompts and outputs are not stored

Addresses the privacy concerns about AI reported by 78% of consumers
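The pattern-based half of data masking can be illustrated with regular expressions. In this minimal sketch, the patterns, placeholder format, and function names are assumptions chosen for readability. Production detectors cover many more formats and, as noted above, pair patterns with ML-based detection.

```python
import re

# Hypothetical patterns for illustration only; real detectors are far broader.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "CARD": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_sensitive(text):
    """Replace sensitive matches with placeholders before text reaches an LLM.

    Returns the masked text and a mapping used to restore the originals later.
    """
    mapping = {}
    for label, pattern in PATTERNS.items():
        def substitute(match, label=label):
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text, mapping):
    """Put the original values back into a response that contains placeholders."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


message = "Contact jane@example.com or 555-123-4567, card 4111 1111 1111 1111."
masked, mapping = mask_sensitive(message)
print(masked)
print(unmask(masked, mapping) == message)
```

The mapping stays on the caller's side, so the model only ever sees placeholders while the final response can still be personalized after it comes back.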

3. Toxicity Detection & Audit Capabilities

Real-time scanning for harmful or biased content in both inputs and outputs

Adversarial testing to identify vulnerabilities before exploitation

Full audit trails for compliance, post-incident analysis, and system improvements
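As a rough illustration of how scanning and audit logging fit together, the sketch below scores both the prompt and the response, then builds a structured audit entry. The word blocklist is a deliberately naive stand-in for a real toxicity classifier, and the function names, field names, and threshold are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

# Placeholder blocklist; a real system would use a trained classifier.
BLOCKLIST = {"idiot", "stupid"}


def toxicity_score(text):
    """Return the fraction of words that appear on the blocklist."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in BLOCKLIST for w in words) / len(words)


def audit_entry(user_id, prompt, response, threshold=0.1):
    """Build one audit record covering both the input and the output."""
    scores = {
        "prompt": toxicity_score(prompt),
        "response": toxicity_score(response),
    }
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        # Store a hash rather than raw text so the log itself holds no content.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "toxicity": scores,
        "flagged": any(score >= threshold for score in scores.values()),
    }


entry = audit_entry("user-1", "You are an idiot", "I can help with that.")
print(json.dumps(entry, indent=2))
```

Logging a hash instead of the prompt text is one possible design choice for this sketch: it lets reviewers confirm which prompt was involved without the audit log becoming another store of sensitive content.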

Challenges in Building Trusted AI

While Salesforce leads the way in ethical AI, the journey isn’t without hurdles. Here are some key challenges:

1. Bias in Data and Algorithms

AI systems learn from data, which can sometimes carry inherent biases. Salesforce combats this by:

- Developing tools to flag bias in datasets.

- Promoting diverse and representative data for training models.
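A simple version of a dataset bias flag checks whether any group falls below a minimum share of the training rows. The function name, the `min_share` threshold, and the sample rows below are hypothetical. This is a generic representation check, not a Salesforce tool.

```python
from collections import Counter


def flag_underrepresented(rows, attribute, min_share=0.2):
    """Return groups whose share of rows falls below `min_share`.

    `rows` is a list of dicts; `attribute` is the key to group by.
    """
    counts = Counter(row[attribute] for row in rows)
    total = sum(counts.values())
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }


# Invented sample: 8 rows from region A, 1 each from regions B and C.
rows = [{"region": "A"}] * 8 + [{"region": "B"}, {"region": "C"}]
print(flag_underrepresented(rows, "region"))
```

A flag here only shows that a group is thinly represented. Deciding whether to collect more data, reweight, or restrict the model's intended use remains a human judgment.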

2. Balancing Innovation and Ethics

Pushing the boundaries of innovation while adhering to ethical standards is a delicate balancing act. Salesforce manages it by:

- Prioritizing long-term trust over short-term gains.

- Regularly updating AI policies to align with evolving ethical standards.

Conclusion

Salesforce’s Trusted AI Principles are a testament to its commitment to ethical innovation. By prioritizing responsibility, accountability, transparency, empowerment, and inclusivity, Salesforce ensures its AI technologies drive progress while upholding core human values. As AI continues to evolve, Salesforce’s leadership in ethical AI sets a powerful example for the tech industry.

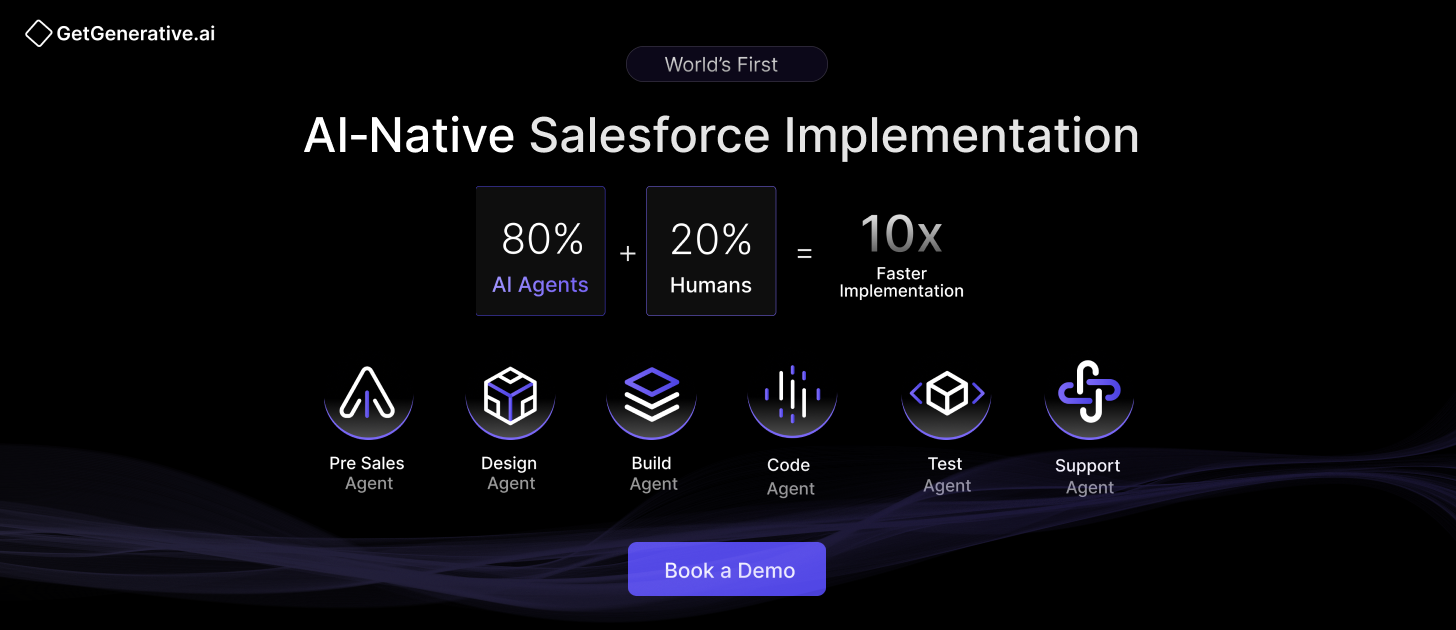

FAQs

1. What are Salesforce’s Trusted AI Principles?

Salesforce’s Trusted AI Principles include Responsibility, Accountability, Transparency, Empowerment, and Inclusivity, aimed at ensuring ethical AI usage.

2. How does Salesforce ensure AI transparency?

Salesforce publishes model cards detailing AI creation, intended uses, and performance, ensuring users understand AI-driven decisions.

3. What is the role of inclusivity in Salesforce’s AI?

Inclusivity ensures AI reflects diverse values, promotes equality, and benefits a wide range of users through representative data and diverse teams.

4. How does Salesforce empower non-technical users?

Salesforce simplifies AI with tools that require no coding and provides free education through its Trailhead platform.

5. What’s next for Salesforce’s Trusted AI?

Salesforce plans to advance ethical AI practices by refining governance frameworks and collaborating with global stakeholders to set new standards.