Can Vibe Coding Work in Regulated Industries? Here’s What You Need to Know

Vibe coding—a paradigm where developers generate software through natural language prompts to AI—is transforming the way code is created.

GitHub’s research found that developers using Copilot completed a benchmark coding task 55% faster, and Y Combinator reported that about a quarter of its Winter 2025 startups built their products primarily with AI-generated code. Google’s CEO has said that more than a quarter of new code at Google is generated by AI and then reviewed by engineers.

However, this speed introduces new challenges, particularly in regulated sectors like healthcare, finance, defense, and energy. These industries require robust compliance, traceability, and software quality that often clash with vibe coding’s loose, experimental nature.

This blog dives into whether vibe coding can thrive in such contexts. We’ll cover:

- What vibe coding actually is

- The specific compliance and governance demands of regulated industries

- The friction points between AI-generated code and regulatory standards

- Where vibe coding can still add value (when done responsibly)

- How to implement vibe coding safely using tools, policies, and governance frameworks

Let’s start by breaking down the paradigm.

What Is Vibe Coding? A New Development Mindset

Vibe coding is a prompt-based software development model where AI systems (like LLMs) take the lead in producing code based on natural language input.

Instead of writing syntax manually, a developer might instruct the AI:

“Create a patient registration form with email validation and success messages.”

The AI then returns a working code block, and the developer continues refining it via further prompts—“Add a password strength meter,” “Fix the CSS layout,” etc.

Key Characteristics:

- AI as a co-creator, not just a suggestion engine

- High-level intent, not low-level logic

- Rapid prototyping, not upfront architecture

- Prompt iteration, not manual refactoring

This creative loop allows developers to stay in “flow,” using the AI to spin up UIs, APIs, and even backend logic with minimal friction. Unlike autocomplete tools such as CodeWhisperer or Copilot, vibe coding treats the AI as a collaborative agent rather than just a productivity aid.

But this hands-off style also tends to skip core engineering practices such as test coverage, design documentation, and dependency control, raising serious red flags for regulated industries.

Why Regulated Industries Have Higher Stakes

Healthcare, finance, defense, pharmaceuticals, and other sectors operate under strict legal, ethical, and operational standards. These industries:

- Handle sensitive data (PHI, PII, banking records)

- Require code traceability and risk analysis

- Demand security assurance, especially for safety-critical or life-impacting systems

- Must comply with regulations and standards such as HIPAA, GDPR, SOX, FDA 21 CFR Part 11, PCI DSS, IEC 62304, FedRAMP, and more

For example:

- Medical device software must map every requirement to specific tests and code modules (per FDA expectations and IEC 62304).

- Banking systems must log all data access and changes to meet SOX and PCI obligations.

- Defense and government systems often require FedRAMP authorization or adherence to NIST SP 800-53 controls, which aim to minimize unknown risks in the software stack.

The mismatch: Vibe coding does not inherently document its logic or rationale. Prompt histories are not substitutes for structured requirement specifications. Nor does AI output guarantee alignment with internal security policies or software engineering standards.

Why Even Regulated Industries Are Interested

Despite the compliance friction, vibe coding offers undeniable advantages—even in conservative industries:

- 73% of developers say AI tools reduce frustration (GitHub, 2024)

- Copilot users complete coding tasks 55% faster on average

- Replit claims 3.5+ hours saved per developer per week using their AI pair-programmer

- AI coding assistants can scaffold dashboards, interfaces, reports, and scripts from scratch

- Subject matter experts (e.g. clinicians, analysts) can build tools without writing code

Key Use Case: In healthcare, AI can rapidly prototype an EMR interface using fake data. Later, compliance teams replace the prototype with production-ready, validated components. In finance, a team can use vibe coding to generate Excel importers, JSON validators, or report templates—freeing engineers for higher-risk features.

The point is not to avoid compliance, but to shift human effort toward the critical parts of the system.

The Core Challenges in Regulated Settings

Despite productivity gains, vibe coding presents five serious risks for regulated organizations:

1. Auditability & Traceability

- Auditors expect each code change to trace back to a requirement, a test, and an approval

- AI-generated code often has no traceable origin or documentation

- “Why was this implemented? Who reviewed it?”—common questions during audits that vibe coding can’t answer easily
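One way to make those audit questions answerable is to attach a small provenance record to every AI-assisted change. The sketch below is illustrative only: the `ProvenanceRecord` class, its field names, the `REQ-101` ID format, and the user names are all assumptions, not part of any standard or vendor tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ProvenanceRecord:
    """Hypothetical audit record for one AI-assisted code change."""
    requirement_id: str   # why the change was implemented
    prompt: str           # what the developer asked the AI
    model: str            # which AI tool produced the draft
    author: str           # developer who owns the change
    reviewers: List[str] = field(default_factory=list)  # who approved it

    def is_audit_ready(self) -> bool:
        # Answerable in an audit only if the change names a requirement
        # and has at least one reviewer other than the author.
        independent = [r for r in self.reviewers if r != self.author]
        return bool(self.requirement_id) and bool(independent)

    def fingerprint(self) -> str:
        # SHA-256 digest of the record, so later edits are detectable.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


record = ProvenanceRecord(
    requirement_id="REQ-101",
    prompt="Create a patient registration form with email validation",
    model="example-llm",
    author="dev.a",
    reviewers=["qa.b"],
)
print(record.is_audit_ready(), record.fingerprint()[:12])
```

Storing the fingerprint alongside the commit gives auditors a cheap way to confirm that the record they are reading is the one that existed at approval time.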

2. Security & Code Quality

- Published studies have reported that roughly 40–52% of AI-generated code samples contain bugs or vulnerabilities

- Copilot has previously suggested insecure code (e.g. hardcoded secrets, outdated crypto)

- In regulated systems, one unvalidated line of code could cause compliance failure or even patient harm

3. Licensing & IP Issues

- AI models trained on public code may reproduce copyrighted or GPL-licensed snippets

- Using such code unknowingly could open regulated firms to lawsuits and IP audits

4. Data Governance Risks

- Many AI assistants send prompts to third-party servers (ChatGPT, GitHub, etc.)

- Submitting PHI, PII, or sensitive operational data may violate HIPAA, GDPR, or banking secrecy laws

- On-prem LLMs or air-gapped tools are often required

5. Liability & Human Accountability

- If AI-generated code causes an incident, the liability rests with your organization

- No LLM vendor accepts responsibility for bugs or breaches

- Developers must assume ownership, even when they didn’t write the code line-by-line

Gartner’s 2025 advisory: “Organizations must not trade speed for control. In highly regulated workflows, human accountability cannot be abstracted away.”

Where Vibe Coding Can Work (With Guardrails)

While vibe coding isn’t a fit for every use case, there are practical low-risk areas where regulated organizations can use it effectively:

1. Rapid Prototyping and MVP Development

Early-stage prototypes and proofs-of-concept that use no real regulated data typically don’t require full compliance. Vibe coding is ideal here:

- A medical startup might use AI to scaffold a patient dashboard UI in minutes

- Bank IT teams can build mock automation flows or internal portals for stakeholder demos

- Pharma R&D teams might simulate workflows using synthetic data to explore new UI/UX designs

Caveat: These prototypes must never go into production without review. Instead, they serve as fast concept starters.

2. Internal, Non-Critical Tools

Not all software in regulated firms is subject to intense scrutiny:

- Internal analytics scripts

- Admin dashboards

- Backend schedulers or extract-transform-load (ETL) pipelines

These tools still benefit from code quality, but pose less direct risk to users or compliance. Vibe coding here can free up engineers for compliance-heavy systems.

3. Human-in-the-Loop Development

This is the safest and most scalable approach:

- Developers use LLMs for drafts

- But every output is manually reviewed, tested, and documented before approval

This ensures compliance while still reaping AI productivity benefits. In practice:

- Prompt → AI draft → Static analysis → Manual review → Approval
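The flow above can be sketched as a small state machine in which an AI draft cannot reach approval without passing through every intermediate stage. The stage names mirror the flow; the `Change` class and its `advance` and `reject` methods are hypothetical and stand in for whatever workflow tool a team actually uses.

```python
from enum import IntEnum


class Stage(IntEnum):
    PROMPT = 0
    AI_DRAFT = 1
    STATIC_ANALYSIS = 2
    MANUAL_REVIEW = 3
    APPROVED = 4


class Change:
    """Hypothetical tracker for one AI-assisted change."""

    def __init__(self) -> None:
        self.stage = Stage.PROMPT
        self.history = [Stage.PROMPT]

    def advance(self) -> Stage:
        # Move exactly one stage forward; stages cannot be skipped.
        if self.stage is Stage.APPROVED:
            raise ValueError("change is already approved")
        self.stage = Stage(self.stage + 1)
        self.history.append(self.stage)
        return self.stage

    def reject(self) -> Stage:
        # A failed analysis or review sends the change back for a new draft.
        if self.stage not in (Stage.STATIC_ANALYSIS, Stage.MANUAL_REVIEW):
            raise ValueError("only analysis or review can reject a change")
        self.stage = Stage.AI_DRAFT
        self.history.append(self.stage)
        return self.stage


change = Change()
change.advance()          # PROMPT -> AI_DRAFT
change.advance()          # AI_DRAFT -> STATIC_ANALYSIS
change.reject()           # static analysis failed, back to AI_DRAFT
for _ in range(3):
    change.advance()      # STATIC_ANALYSIS, MANUAL_REVIEW, APPROVED
print(change.stage.name, [s.name for s in change.history])
```

The recorded `history` doubles as evidence that the manual review step was actually reached before approval.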

As Cycode’s AI DevSecOps team advises:

“Treat AI outputs as insecure until proven otherwise. Always audit before production.”

4. Secure, On-Prem AI Tools

Some AI tools now cater specifically to regulated markets:

- Tabnine Enterprise: Fully offline, air-gapped LLM deployment for defense/government

- CodeWhisperer for Enterprise: Built with data retention controls and IAM integration

- Qodo AI: SOC 2-compliant test generation assistant used by compliance teams

When deployed on-premises or air-gapped, tools like these are designed so that:

- No data leaves your infrastructure

- Every prompt is encrypted and logged

- Suggestions follow corporate style guides and coding policies

5. Incremental Rollouts & Controlled Pilots

Start with small experiments:

- Track AI productivity vs. error rate

- Assess whether governance policies were followed

- Use internal metrics (time to delivery, defect rate, audit pass %)

This gradual approach builds confidence and allows tailoring policies before a full rollout.
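As a rough illustration, the pilot metrics named above reduce to a few ratios. The `PilotStats` class, its field names, and the sample numbers are assumptions made up for this sketch; real pilots would pull these counts from issue trackers and audit logs.

```python
from dataclasses import dataclass


@dataclass
class PilotStats:
    """Hypothetical counters collected during a vibe coding pilot."""
    changes_merged: int     # AI-assisted changes merged in the pilot
    defects_found: int      # defects later traced to those changes
    audits_run: int
    audits_passed: int
    baseline_days: float    # mean time to delivery before the pilot
    pilot_days: float       # mean time to delivery during the pilot

    def __post_init__(self) -> None:
        # Guard against division by zero in the ratios below.
        if min(self.changes_merged, self.audits_run) <= 0 or self.baseline_days <= 0:
            raise ValueError("counts and baseline must be positive")

    @property
    def defect_rate(self) -> float:
        return self.defects_found / self.changes_merged

    @property
    def audit_pass_pct(self) -> float:
        return 100 * self.audits_passed / self.audits_run

    @property
    def delivery_speedup_pct(self) -> float:
        return 100 * (self.baseline_days - self.pilot_days) / self.baseline_days


stats = PilotStats(40, 6, 10, 9, baseline_days=10.0, pilot_days=7.5)
print(stats.defect_rate, stats.audit_pass_pct, stats.delivery_speedup_pct)
```

Comparing these numbers against the same ratios for non-AI work is what shows whether the speed gain is being paid for in defects or failed audits.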

Best Practices for Safe Vibe Coding Adoption

If your organization decides to move forward, implement these non-negotiable guardrails:

1. Formal Governance Frameworks

Establish and enforce:

- Which AI tools are approved

- What data is permissible in prompts

- Code ownership rules

- Approval workflows for merging AI-generated code

For example, use the V.E.R.I.F.Y. checklist:

- Validate code correctness

- Enforce coding standards

- Review by humans

- Inspect for security issues

- Format documentation

- Yield audit artifacts

2. Documentation & Audit Trails

Require:

- Prompt logs for all AI-assisted features

- Annotated diffs showing human edits

- Traceability matrices linking requirements → code → tests

Many LLM platforms now support prompt versioning and integration with SDLC tools (Jira, Git, etc.).
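A traceability matrix can be as simple as two mappings joined together. The sketch below assumes requirements are already linked to code modules and modules to tests; the function names, requirement IDs, and file paths are hypothetical examples.

```python
from typing import Dict, List, NamedTuple


class TraceRow(NamedTuple):
    requirement: str
    code_modules: List[str]
    tests: List[str]


def build_matrix(
    req_to_code: Dict[str, List[str]],
    code_to_tests: Dict[str, List[str]],
) -> List[TraceRow]:
    """Join requirement -> code and code -> tests into one row per requirement."""
    rows = []
    for req in sorted(req_to_code):
        modules = req_to_code[req]
        tests = sorted({t for m in modules for t in code_to_tests.get(m, [])})
        rows.append(TraceRow(req, modules, tests))
    return rows


def find_gaps(rows: List[TraceRow]) -> List[str]:
    """Return requirements that lack either implementing code or tests."""
    return [r.requirement for r in rows if not r.code_modules or not r.tests]


req_to_code = {
    "REQ-1": ["forms/register.py"],
    "REQ-2": ["forms/validate.py"],
    "REQ-3": [],
}
code_to_tests = {"forms/register.py": ["tests/test_register.py"]}

matrix = build_matrix(req_to_code, code_to_tests)
print(find_gaps(matrix))  # ['REQ-2', 'REQ-3']
```

Running a gap check like this before merge turns a missing test or an unimplemented requirement into a visible failure rather than an audit finding.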

3. Code Review & Testing Pipelines

AI-generated code must pass through:

- Static code analysis (SAST)

- Dependency checks (SBOM generation)

- Secret scans (detect hardcoded credentials)

- Dynamic application testing (DAST)

- License scanners (FOSSA, Snyk, etc.)

Use CI/CD tools (e.g. GitHub Actions, GitLab, Jenkins) to automate enforcement.
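To show the idea behind one of these gates, here is a deliberately minimal secret scan. It is a sketch, not a replacement for a dedicated scanner: the three patterns and the `scan_source` function are assumptions chosen for illustration and will miss many real secret formats.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners ship far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(
        r"(password|passwd|secret|api_key)\s*=\s*['\"][^'\"]+['\"]",
        re.IGNORECASE,
    ),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_source(source: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


ai_draft = '''import os
db_password = "hunter2"
token = os.environ["API_TOKEN"]
'''

findings = scan_source(ai_draft)
print(findings)  # [(2, 'hardcoded_password')]
```

In a CI job, a non-empty `findings` list would translate into a non-zero exit code so the merge is blocked until the credential is removed.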

4. AI Literacy for Developers

Train your team to:

- Write secure prompts

- Understand AI limitations

- Review outputs critically

- Know when to escalate for legal/compliance review

Use internal playbooks or short courses (e.g. Hugging Face AI ethics modules) to onboard teams quickly.

5. Use Enterprise AI Platforms

Avoid free/public tools for production use. Prefer:

- HIPAA-compliant services

- SOC 2, ISO 27001, or FedRAMP attestations and certifications

- Air-gapped or private LLM hosting

Vetted platforms help eliminate uncertainty around security and data exposure.

6. Sensitive Data Handling

Never input:

- PHI (Protected Health Information)

- PII (Personally Identifiable Information)

- Client secrets or proprietary algorithms

Use:

- Synthetic or obfuscated test data

- Prompt filtering tools to block sensitive terms

- On-premises models with restricted access
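A prompt filter can sit between the developer and the AI tool and redact obvious identifiers before anything leaves the workstation. The sketch below is a rough illustration: the `redact_prompt` function and its three regular expressions are assumptions, and pattern matching alone will not catch names, free-text clinical notes, or other context-dependent PHI.

```python
import re
from typing import List, Tuple

# Illustrative patterns; order matters because each pass rewrites the prompt.
REDACTIONS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact_prompt(prompt: str) -> Tuple[str, List[str]]:
    """Replace matches with placeholders and report which rules fired."""
    hits = []
    for label, pattern in REDACTIONS:
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            hits.append(label)
    return prompt, hits


clean, hits = redact_prompt(
    "Fix the form for jane.doe@example.com, SSN 123-45-6789"
)
print(clean)  # Fix the form for [EMAIL], SSN [SSN]
print(hits)   # ['EMAIL', 'SSN']
```

A stricter policy could reject the prompt outright whenever `hits` is non-empty instead of forwarding the redacted text.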

7. Metrics and Continuous Monitoring

Monitor:

- Time saved vs. errors introduced

- Compliance issue rates

- Prompt reuse patterns

- Developer confidence (via surveys)

These insights inform whether to expand or restrict vibe coding usage.

Strategic Takeaways for Leaders

If you’re a CIO, CTO, or compliance head in a regulated industry, here’s your action plan:

1. Start with Risk Segmentation

Classify software projects into tiers:

- ✅ MVPs and prototypes → safe for vibe coding

- ⚠️ Internal tools → OK with audit

- ❌ Core production systems → require full compliance & oversight
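The three tiers above could be encoded as a simple classification rule so that every new project gets a tier before any AI tool is used. The three input questions in `classify` are assumptions chosen for illustration; each organization would substitute its own risk criteria.

```python
from enum import Enum


class Tier(Enum):
    PROTOTYPE = "safe for vibe coding"
    INTERNAL = "OK with audit"
    CORE = "requires full compliance and oversight"


def classify(
    in_production: bool,
    handles_regulated_data: bool,
    customer_facing: bool,
) -> Tier:
    """Hypothetical tiering rule based on three yes/no questions."""
    # Anything touching regulated data is treated as core, even a prototype.
    if handles_regulated_data or (in_production and customer_facing):
        return Tier.CORE
    if in_production:
        return Tier.INTERNAL
    return Tier.PROTOTYPE


print(classify(False, False, False).name)  # PROTOTYPE
print(classify(True, False, False).name)   # INTERNAL
print(classify(True, True, False).name)    # CORE
```

Note that the rule deliberately escalates a prototype to the core tier as soon as it touches real regulated data, which matches the advice to prototype only with synthetic data.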

2. Form an AI Governance Task Force

Include reps from:

- Engineering

- IT security

- Legal & compliance

- Audit and quality assurance

Charge this team with:

- Tool approval

- Data protection policies

- Enforcement playbooks

- Regular reviews of AI usage logs

3. Choose Partners with Compliance Track Records

Look for AI vendors with:

- Explicit compliance alignment

- Enterprise security support

- Case studies from other regulated industries (e.g. Tabnine for defense, Qodo for pharma)

4. Align with Evolving Global Regulations

The EU AI Act, U.S. Executive Orders, and upcoming FTC/DOJ guidance are all circling AI governance.

Stay ahead by:

- Creating internal AI use policies now

- Logging and documenting all LLM use

- Adopting transparency and traceability tools early

Conclusion

Vibe coding is not a shortcut to regulated software. But when paired with strong governance, it can:

- Speed prototyping

- Reduce repetitive dev tasks

- Empower non-developers to build tools

- Free engineers to focus on compliance-heavy logic

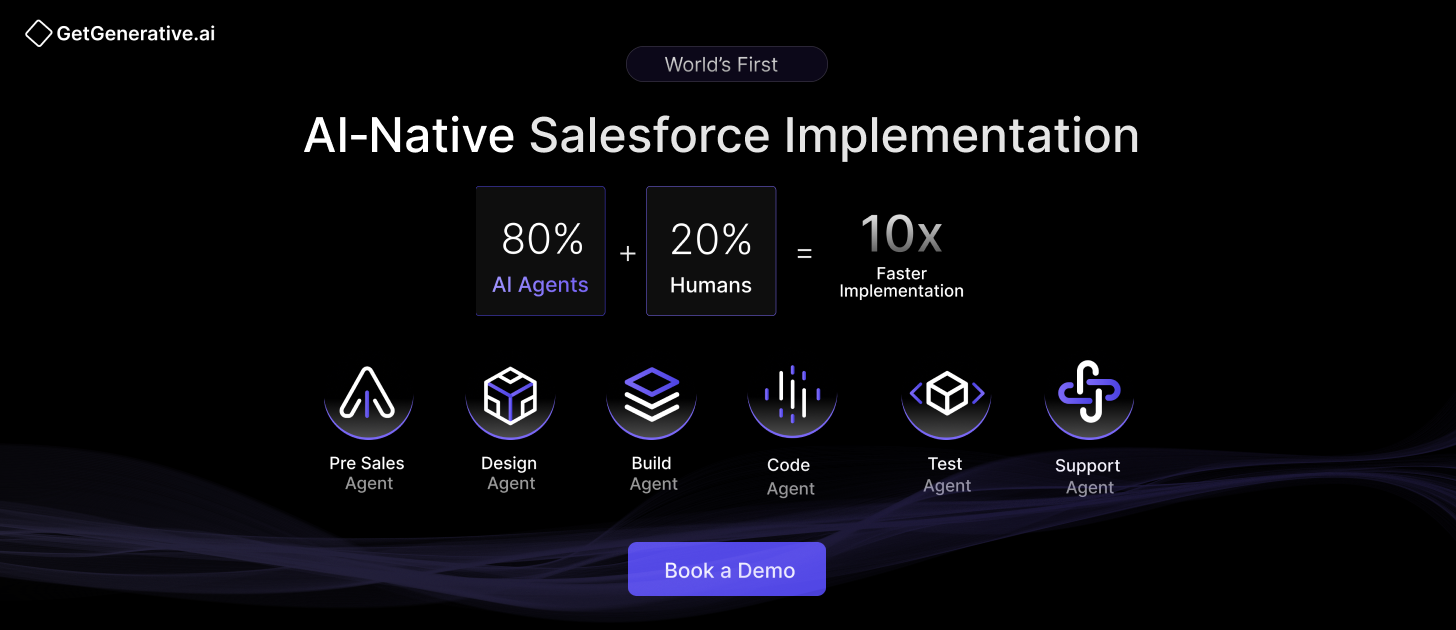
